AI Coding for Lazy Developers

Atul Jalan


May 27, 2025

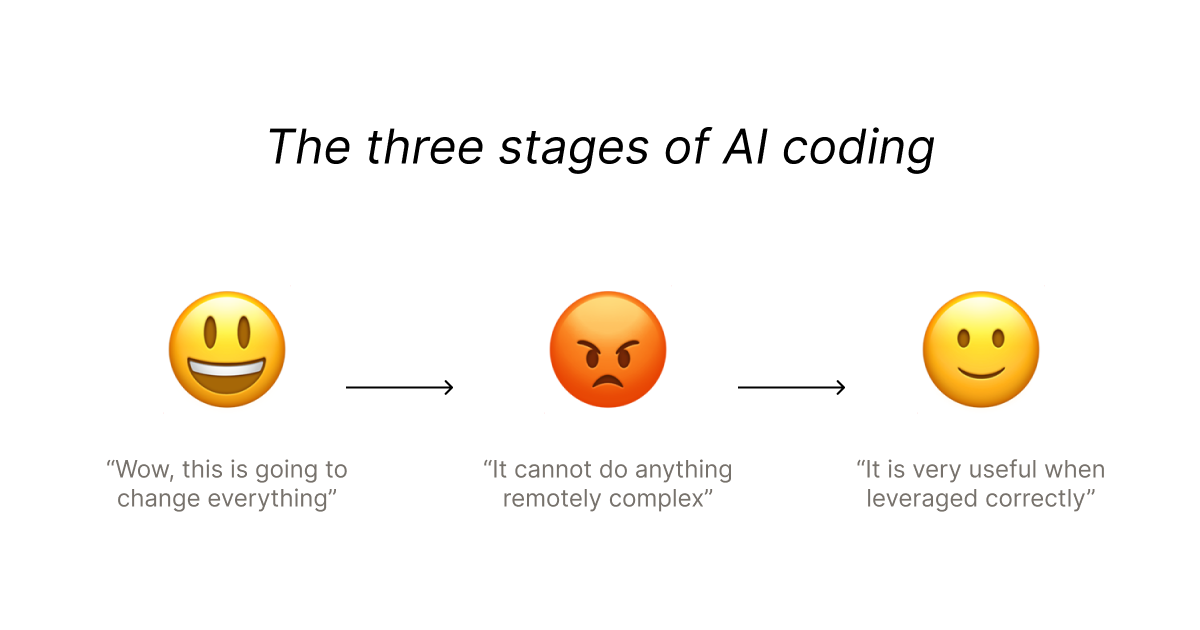

After years of AI coding, I've realized that success with AI comes down to developing intuition for when it works well and when it doesn't. It will one-shot an advanced rate limiter, then fail egregiously when you ask it to help debug a basic useEffect bug.

As a lazy developer, my goal is to use AI for the quick wins where I'm confident it will work well. These are the kinds of tasks where I don't need to set up complex workflows and rules, or spend 10 minutes writing a prompt.

This essay is a summary of my learnings.

Green-field tasks (Excellent)

Utilities like rate limiters, date formatting, array transformations, etc.

Base UI components like inputs and selects

Regex formulas

In these situations, use AI religiously to one-shot the entire thing.

For the best outcome, write the function or class signature yourself and have the AI fill in the implementation. This maximizes the chance that the LLM adheres to your vision of how the final product should work. For maximum speed, have the model generate just the signature, edit it to your liking, then ask it to write the actual code.
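A minimal sketch of that workflow, using the rate limiter mentioned above. It assumes a token-bucket design and a hypothetical `RateLimiter` class; the essay doesn't say which algorithm was used. The class name, constructor parameters, and doc comments are the part you would write (or have the model draft, then edit); the method bodies are what you would then ask it to fill in. The injectable `now` clock is an added assumption that makes the limiter easy to test.

```typescript
// Written by you: the public contract (class name, parameters, doc comments).
// Filled in by the model: the private state and the method bodies.

/**
 * Token-bucket rate limiter (hypothetical example).
 * Allows bursts of up to `capacity` requests and refills
 * at `refillPerSecond` tokens per second.
 */
class RateLimiter {
  private readonly capacity: number;
  private readonly refillPerSecond: number;
  private readonly now: () => number;
  private tokens: number;
  private lastRefillMs: number;

  /**
   * @param capacity - Maximum number of tokens the bucket can hold.
   * @param refillPerSecond - Tokens added per elapsed second.
   * @param now - Clock in milliseconds; injectable for deterministic tests.
   */
  constructor(capacity: number, refillPerSecond: number, now: () => number = () => Date.now()) {
    this.capacity = capacity;
    this.refillPerSecond = refillPerSecond;
    this.now = now;
    this.tokens = capacity; // start with a full bucket
    this.lastRefillMs = now();
  }

  /** Consumes one token and returns true if a request is allowed right now. */
  tryAcquire(): boolean {
    this.refill();
    if (this.tokens >= 1) {
      this.tokens -= 1;
      return true;
    }
    return false;
  }

  // Adds tokens for the time elapsed since the last refill, capped at capacity.
  private refill(): void {
    const nowMs = this.now();
    const elapsedSeconds = (nowMs - this.lastRefillMs) / 1000;
    this.tokens = Math.min(this.capacity, this.tokens + elapsedSeconds * this.refillPerSecond);
    this.lastRefillMs = nowMs;
  }
}
```

Pinning the signature first leaves the model only the body to write, so there is little room for it to choose a different API shape than the one you had in mind.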

Pattern Expansion (Great)

LLMs are great at repeating existing patterns, especially once you've written the first few examples. This works surprisingly well even when the pattern is specific to your codebase, since LLMs are fundamentally trained to model and reproduce patterns in data.

Add more variants to a component once the base exists (e.g. more button types)

Generate additional endpoints or models following existing conventions

Expand schema definitions in an OpenAPI or JSON Schema

How formulaic is the task? Less nuance is better here. You want tasks where the same set of patterns is applied every time, rather than applied conditionally based on other aspects of the data.

How organized are the examples? If this is a pattern you will repeat a lot (e.g. API endpoints), it's worth the time to create 2-3 excellent examples that you can throw into context every time.
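As a sketch of the kind of formulaic pattern that works well here, consider a hypothetical variant table for a base `Button` component (the Tailwind-style class names are placeholders, not from any real codebase). Every entry has the same two keys and no conditional logic, so once a few hand-written entries exist, a new variant like `danger` is a near-mechanical extension a model can produce from context.

```typescript
// Hypothetical variant table for a base Button component.
// Class names are Tailwind-style placeholders for illustration.
type ButtonVariant = "primary" | "secondary" | "ghost" | "danger";

interface VariantStyles {
  base: string; // background and text colors
  hover: string; // hover-state background
}

const buttonVariants: Record<ButtonVariant, VariantStyles> = {
  // Hand-written examples that establish the pattern.
  primary: { base: "bg-blue-600 text-white", hover: "hover:bg-blue-700" },
  secondary: { base: "bg-gray-100 text-gray-900", hover: "hover:bg-gray-200" },
  ghost: { base: "bg-transparent text-gray-900", hover: "hover:bg-gray-100" },
  // The kind of entry you would ask the model to add: same shape, new values.
  danger: { base: "bg-red-600 text-white", hover: "hover:bg-red-700" },
};

// Combines shared layout classes with the variant-specific ones.
function buttonClassName(variant: ButtonVariant): string {
  const { base, hover } = buttonVariants[variant];
  return `rounded px-4 py-2 ${base} ${hover}`;
}
```

Because `Record<ButtonVariant, VariantStyles>` requires an entry for every member of the union, adding a name to `ButtonVariant` makes the compiler flag a missing entry, which is a cheap check on the model's output.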

Documentation (Great)

LLMs are great at producing well-written documentation (e.g. docstrings) for your methods and classes. This task works well because it plays to a core strength of LLMs: compression. Just as they're trained to pack vast amounts of knowledge into a small set of weights, they can also compress logic from lots of code into a high-level explanation.

LLMs are further aided by existing language conventions for how to write docstrings and comments, leaving them less room to freestyle and potentially mess up.
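For a sense of what those conventions look like, here is a hypothetical array utility with a doc comment in the JSDoc/TSDoc style (`@param`, `@returns`, `@throws`, `@example`). The structure is fixed by convention, so the model mostly has to compress the function's behavior into the right slots.

```typescript
/**
 * Splits an array into consecutive chunks of at most `size` elements.
 *
 * @param items - The array to split. It is not modified.
 * @param size - Maximum number of elements per chunk; must be a positive integer.
 * @returns The chunks in their original order; the last chunk may be shorter.
 * @throws {RangeError} If `size` is not a positive integer.
 *
 * @example
 * chunk([1, 2, 3, 4, 5], 2); // [[1, 2], [3, 4], [5]]
 */
function chunk<T>(items: readonly T[], size: number): T[][] {
  if (!Number.isInteger(size) || size <= 0) {
    throw new RangeError(`size must be a positive integer, got ${size}`);
  }
  const result: T[][] = [];
  // Step through the input `size` elements at a time, copying each slice.
  for (let i = 0; i < items.length; i += size) {
    result.push(items.slice(i, i + size));
  }
  return result;
}
```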

Code Explanation (Good)

LLMs are reasonably effective at summarizing what blocks of code do, even when the code is quite long and spans multiple files. The summaries are rarely perfect, but they tend to be directionally correct, which is often enough to accelerate understanding.

Don't waste time formatting the prompt or providing tons of additional context. The increase in response quality is often minimal.

Obviously, never trust the explanation word-for-word. The summaries will almost always skip over sections of the source code and confidently misstate logic in a highly believable way. Use this capability as a jumping-off point before diving in, not as the final word.

Technical Spec Review (Good)

Ask LLMs to review your technical planning docs and point out possible issues and edge cases you may not have thought of.

Review plays to an LLM's strengths: it maps your specific plan to its generalized equivalent and pattern-matches that against its vast training set. In contrast, asking an LLM to write the document itself usually produces poor results, since it has to work in the other direction, mapping a generalized task onto your specific context.

Even if the majority of its recommendations are irrelevant, there will usually be at least one or two points that are worth considering and addressing in the spec.

Debugging (Bad)

LLMs are poor primary tools for debugging. They struggle in situations where the root cause is unclear and context is fragmented. The worst part is that they will confidently hallucinate incorrect solutions, burning your time and spiking your cortisol.

Debugging is generally a process of exploration. You start with minimal knowledge and slowly gain understanding through iterative investigation. LLMs, on the other hand, perform best when given comprehensive context and asked to produce clear outputs.

Multi-file edits (Terrible)

In my experience, success rates drop sharply as soon as the requested edit spans multiple files. I've tested this by comparing how an LLM performs when asked to make an edit across multiple files vs. those same files combined into one larger document. It always does significantly better in the latter case.

I've found it's faster to decompose the task into multiple requests that each edit a single file. This reduces the contextual burden on the LLM and the cognitive load on you as the reviewer.
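A minimal sketch of both setups, assuming each file is available as a path plus its contents. `FileToEdit`, `combineIntoOneDocument`, and `buildPerFileRequests` are hypothetical helpers, and the prompt wording is illustrative rather than the author's. The first function builds the single combined document from the comparison described above; the second decomposes one change into independent single-file requests.

```typescript
// Hypothetical representation of one file involved in a change.
interface FileToEdit {
  path: string;
  content: string;
}

// Comparison setup: the same files concatenated into one document,
// each preceded by a header line naming its path.
function combineIntoOneDocument(files: readonly FileToEdit[]): string {
  return files.map((file) => `--- ${file.path} ---\n${file.content}`).join("\n\n");
}

// Recommended setup: one request per file. Each prompt restates the
// overall task but includes, and asks for edits to, exactly one file.
function buildPerFileRequests(task: string, files: readonly FileToEdit[]): string[] {
  return files.map((file, index) =>
    [
      `Overall task: ${task}`,
      `Step ${index + 1} of ${files.length}: edit only ${file.path}.`,
      "Return the full updated contents of this file and nothing else.",
      "",
      `--- ${file.path} ---`,
      file.content,
    ].join("\n"),
  );
}
```

Each per-file request can then be reviewed and applied on its own, which is where the reduced review load comes from.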

Conclusion

For me, success with LLMs has had less to do with prompting and more to do with task selection. This runs counter to the conventional wisdom that LLM performance hinges almost entirely on the precision and depth of the prompt.

No doubt, there are much more sophisticated workflows for using LLMs. My goal has been to optimize time investment to successful results, not to engage in an exhaustive search for the perfect setup. Besides, the landscape is evolving far too quickly to make such a quest worthwhile.

While online discussion on AI coding skews to the extreme (either AI-first or AI-never), I think most developers are part of a silent majority with a more measured approach. To that group: publish your workflows! There's far too much noise from influencers tweeting about how they're commanding an army of AI agents to build 7 apps in a weekend, and not enough from developers at mature companies explaining how they're using AI coding on real projects in large codebases. Hopefully this guide helps tip the balance in that direction.

The Hidden Complexity of Scaling WebSockets

WebSockets are at the very core of Compose. Learn how we scaled them to support 1000s of concurrent users.

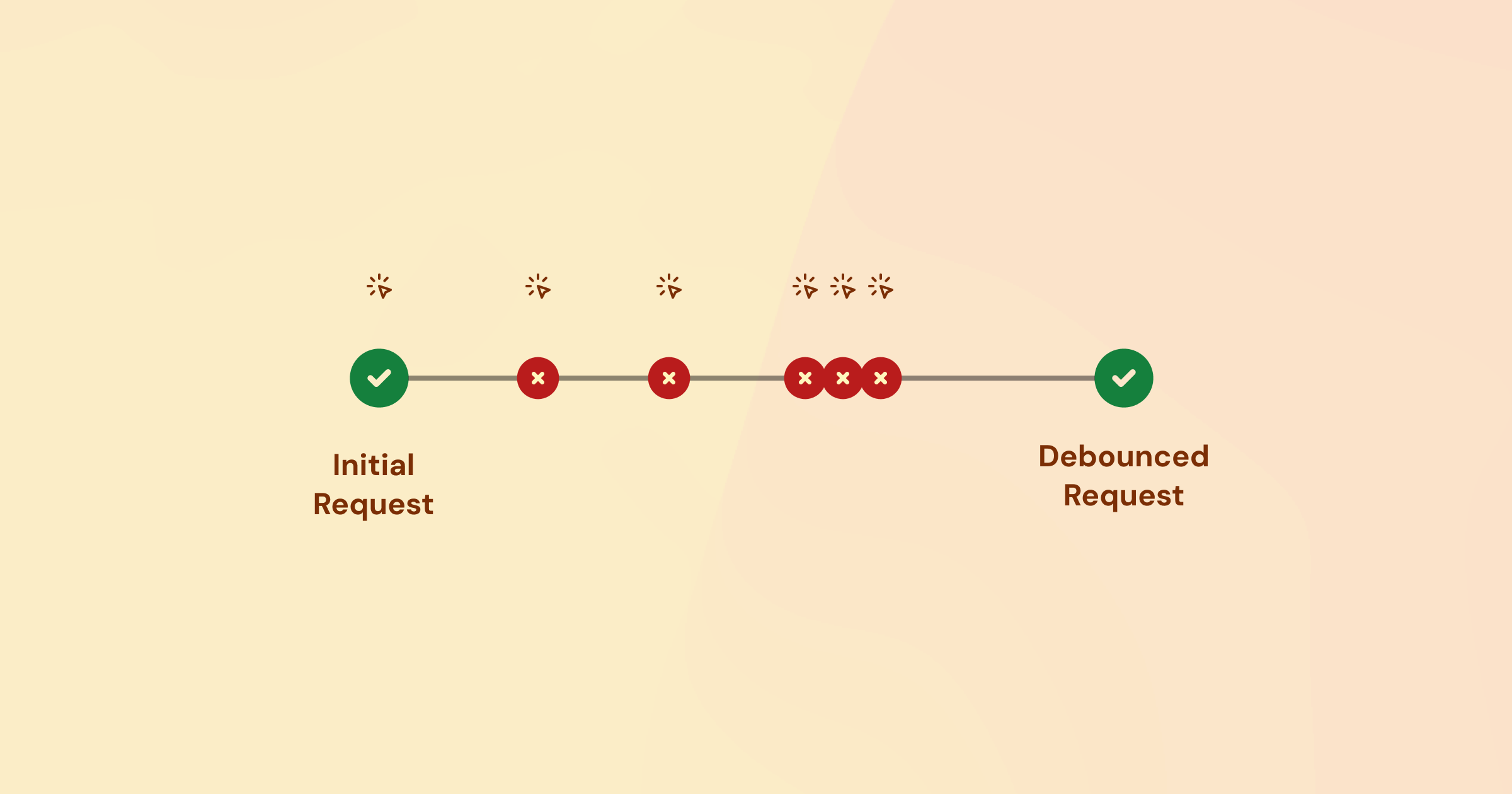

Improving UI performance by optimizing our debouncer

Peek into the internals of Compose's UI rendering engine.

Subscibe to our developer newsletter to get occasional emails when we publish new articles and updates.

2026 Compose. All rights reserved.