Improving UI performance by optimizing our debouncer

Boosting performance with a smarter debouncer

Atul Jalan

3 min read

January 17, 2025

If you're reading this, you're probably already familiar with Compose. If not, here's a summary: Compose is a platform for turning backend logic into shareable web apps. You can use our SDKs to connect your logic to simple UI methods that render in a hosted dashboard.

Setting context

We released Compose last year with the intention of letting engineers build simple tools and dashboards from a library of components like tables, charts, forms, etc.

This worked well enough, but power users started asking for more. Instead of building simple dashboards, they wanted to build more complex applications: tools to label data, manage feature flags, and more.

To support these use cases, we introduced a new SDK method: page.update(). Developers can call this method at any time, and Compose figures out what has changed in the data and updates the UI accordingly.

Today, I want to discuss how we built that method, and how we optimized it to remain performant at scale.

The problem

While page.update() is simple for the developer, actually making the UI update is quite complex. As you call SDK methods to render tables, charts, and other components, Compose builds a UI tree to represent the page. Whenever you want to update a component, we:

- Generate a new UI tree
- Diff it against the previous one
- Send instructions about what to update over the network to the browser
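The diff step can be illustrated with a deliberately simplified sketch. This is hypothetical and not Compose's implementation: it assumes every node carries a stable `id`, matches siblings by `id`, compares props shallowly, and emits flat `add` / `remove` / `update` instructions. The `UINode` and `Instruction` types and the `diffTrees` function are invented for illustration.

```typescript
// Hypothetical UI tree node: a stable id, a component type, props, children.
interface UINode {
  id: string;
  type: string;
  props: Record<string, unknown>;
  children: UINode[];
}

// Hypothetical instructions that would be sent to the browser.
type Instruction =
  | { op: "add"; parentId: string | null; node: UINode }
  | { op: "remove"; id: string }
  | { op: "update"; id: string; props: Record<string, unknown> };

// Shallow prop comparison; real props may need something deeper.
function propsEqual(a: Record<string, unknown>, b: Record<string, unknown>): boolean {
  const keysA = Object.keys(a);
  if (keysA.length !== Object.keys(b).length) return false;
  return keysA.every((k) => k in b && Object.is(a[k], b[k]));
}

// Diff two lists of sibling nodes (matched by id) under the same parent.
function diffChildren(
  prev: UINode[],
  next: UINode[],
  parentId: string | null,
  out: Instruction[],
): void {
  const prevById = new Map(prev.map((n) => [n.id, n] as const));
  const nextIds = new Set(next.map((n) => n.id));

  // Nodes that no longer exist in the new tree.
  for (const old of prev) {
    if (!nextIds.has(old.id)) out.push({ op: "remove", id: old.id });
  }

  for (const node of next) {
    const old = prevById.get(node.id);
    if (!old || old.type !== node.type) {
      // New node, or its component type changed: replace it wholesale.
      if (old) out.push({ op: "remove", id: old.id });
      out.push({ op: "add", parentId, node });
    } else {
      // Same node: send only changed props, then recurse into children.
      if (!propsEqual(old.props, node.props)) {
        out.push({ op: "update", id: node.id, props: node.props });
      }
      diffChildren(old.children, node.children, node.id, out);
    }
  }
}

// Entry point: diff the top-level nodes of two page trees.
function diffTrees(prev: UINode[], next: UINode[]): Instruction[] {
  const out: Instruction[] = [];
  diffChildren(prev, next, null, out);
  return out;
}
```

Even this toy version walks the whole tree on every call, which is why running it once per page.update() adds up quickly during a burst. It also ignores sibling reordering, which a real differ would need to handle.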

This process is computationally expensive, so we don't want to run it more often than necessary. One big issue we have to handle is a developer updating the UI thousands of times in rapid succession. For example:

    let data = await fetchData({ rows: 1000 });

    page.add(() => ui.table(data));

    data.forEach((_, idx) => {
      data[idx] = { ...data[idx], newField: "value" };
      page.update();
    });

The above code requests 1,000 UI updates within a couple of milliseconds. Actually performing 1,000 updates in that time would be impossible, and attempting it would likely crash whatever server the SDK is running on. To solve this, we need a way to moderate bursts of requests. In other words, we need to debounce.

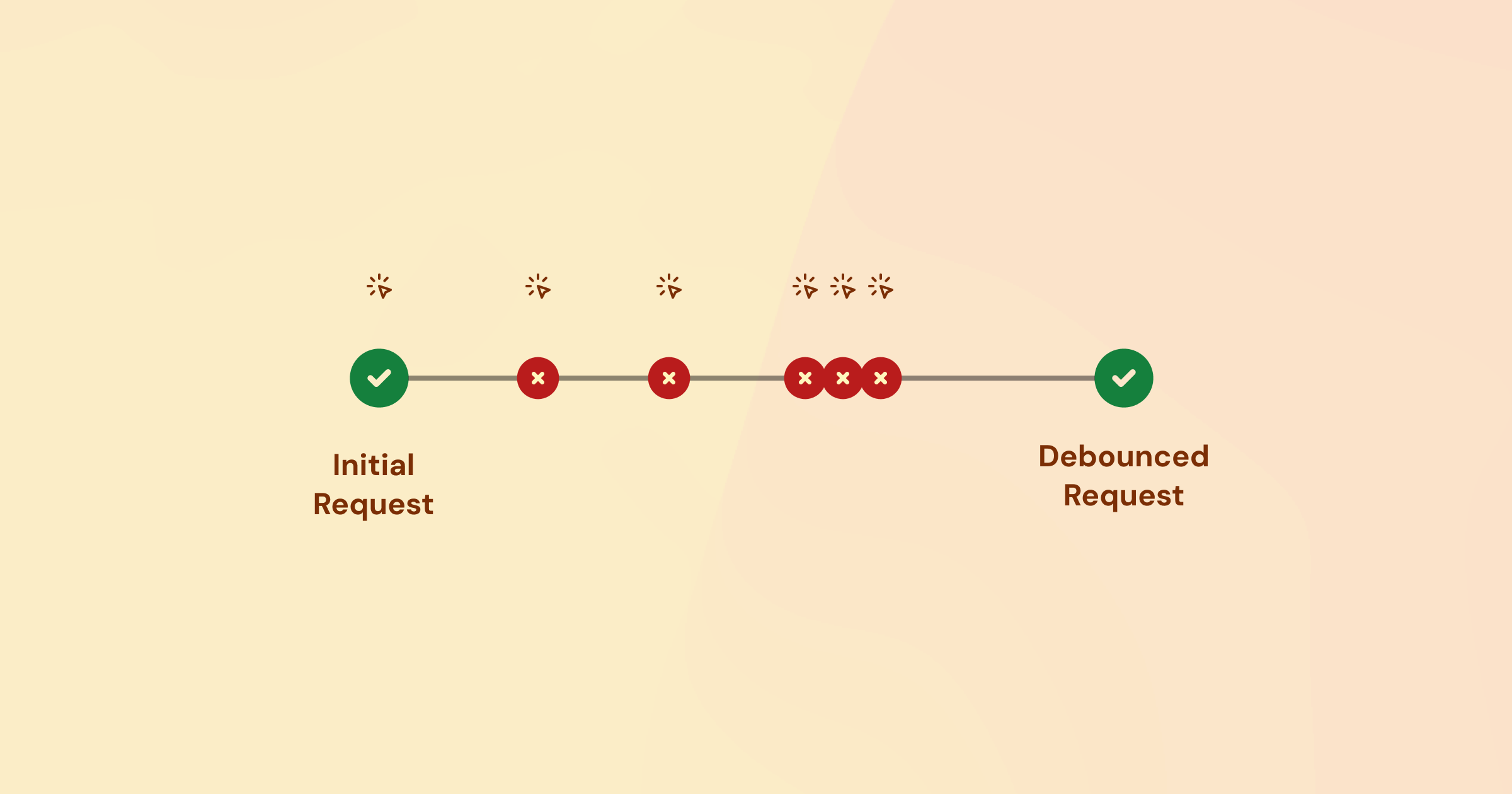

Traditionally, debouncers implement a small artificial delay to limit the frequency of requests. New requests reset the delay, and the debounced function is only called once the delay has passed.
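For comparison, a conventional trailing-edge debouncer fits in a few lines. This is a generic sketch (the `debounce` name and signature are ours, not part of the Compose SDK): every call cancels the pending timer and schedules a new one, so the wrapped function runs only once calls have stopped for `delayMs`, using the arguments of the last call.

```typescript
// Generic trailing-edge debounce: the wrapped function runs once,
// `delayMs` after the most recent call, with that call's arguments.
function debounce<Args extends unknown[]>(
  fn: (...args: Args) => void,
  delayMs: number,
): (...args: Args) => void {
  let timer: ReturnType<typeof setTimeout> | null = null;
  return (...args: Args) => {
    // Each new call resets the delay.
    if (timer !== null) clearTimeout(timer);
    timer = setTimeout(() => {
      timer = null;
      fn(...args);
    }, delayMs);
  };
}
```

Wrapping the update in something like this would collapse the 1,000-call loop into a single update, but every update, even an isolated one, would now wait the full delay before anything happens.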

This is a great solution for most situations, but it introduces unacceptable latency for our use case. Users expect their apps to be responsive, and Compose delivers on this by keeping average update latency below 50ms. Even a 5ms delay from the debouncer would slow down updates by 10%.

We need to find a way to debounce without introducing latency, while still ensuring that the UI is updated with the latest data.

A smarter debouncer

- When page.update() is first called, we run it immediately.
- Afterwards, we begin a batching period, during which any subsequent updates are batched into a single request.
- The batching period ends once a specified amount of time has passed since the last request (i.e., the burst of updates has ended).

Since we expect most page.update() calls to be spaced out, this approach lets us avoid introducing latency in 95% of cases while maintaining system stability in the remaining 5%.

The tradeoff is that during a burst of updates, the debouncer runs the callback twice: once immediately, then again after the debounce period. While this is not ideal, it solves our two biggest issues: latency and data freshness.
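To make the batching rules concrete, the sketch below replays a list of call timestamps against them and reports which calls actually run, and when. It is a simplified, deterministic model written for illustration (the `simulateSmartDebounce` name and the `Fired` type are hypothetical), not production code, and it assumes that a timer whose deadline coincides with a new call fires first.

```typescript
// One executed update: which call ran, and at what time (ms).
interface Fired {
  index: number;
  at: number;
}

// Replay call timestamps (ascending, in ms) through the leading + trailing
// batching rules and return the updates that would actually execute.
function simulateSmartDebounce(callTimes: number[], intervalMs: number): Fired[] {
  const fired: Fired[] = [];
  let batching = false;
  let deadline: number | null = null; // when the batching period ends
  let pending: number | null = null; // latest call queued during the batch

  // End the batching period, running the queued call if there is one.
  const flush = () => {
    if (deadline !== null && pending !== null) {
      fired.push({ index: pending, at: deadline });
    }
    batching = false;
    deadline = null;
    pending = null;
  };

  callTimes.forEach((t, index) => {
    // The timer would have fired before this call arrived.
    if (deadline !== null && deadline <= t) flush();

    if (!batching) {
      // Leading edge: run immediately and open a batching period.
      batching = true;
      fired.push({ index, at: t });
    } else {
      // Inside a burst: keep only the most recent call.
      pending = index;
    }
    // Every call pushes the end of the batching period back.
    deadline = t + intervalMs;
  });

  flush(); // trailing edge for a burst that is still open at the end
  return fired;
}
```

For example, calls at 0, 1 and 2 ms with a 25ms interval execute call 0 at 0ms and call 2 at 27ms, so the callback runs twice for the burst, while calls spaced 30ms apart each execute immediately.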

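    class SmartDebounce {
      // True while a burst is in progress; calls made now are batched.
      private isBatching: boolean = false;
      private debounceTimer: ReturnType<typeof setTimeout> | null = null;
      private readonly debounceInterval: number;
      // True while a batched update is waiting for the timer to fire.
      public hasQueuedUpdate: boolean = false;

      constructor(debounceIntervalMs: number = 25) {
        this.debounceInterval = debounceIntervalMs;
        // Bind methods so they can safely be passed around as callbacks.
        this.run = this.run.bind(this);
        this.debounce = this.debounce.bind(this);
        this.cleanup = this.cleanup.bind(this);
      }

      public run(callback: () => Promise<void> | void): void {
        if (!this.isBatching) {
          // First call of a burst: open a batching period...
          this.isBatching = true;
          this.debounce(() => {
            this.isBatching = false;
          });
          // ...and run the callback immediately, with no added latency.
          this.hasQueuedUpdate = false;
          callback();
        } else {
          // Later call during the burst: replace any pending update with
          // this one and push back the end of the batching period.
          this.hasQueuedUpdate = true;
          this.debounce(() => {
            this.isBatching = false;
            this.hasQueuedUpdate = false;
            callback();
          });
        }
      }

      // Trailing-edge timer: each call cancels the previously scheduled callback.
      private debounce(callback: () => Promise<void> | void): void {
        if (this.debounceTimer !== null) {
          clearTimeout(this.debounceTimer);
        }
        this.debounceTimer = setTimeout(() => {
          this.debounceTimer = null;
          callback();
        }, this.debounceInterval);
      }

      // Cancel any pending update and reset state so the next call runs immediately.
      public cleanup(): void {
        if (this.debounceTimer !== null) {
          clearTimeout(this.debounceTimer);
          this.debounceTimer = null;
        }
        this.isBatching = false;
        this.hasQueuedUpdate = false;
      }
    }

Optimizing further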

While the debouncer gives us a lot of performance for very little complexity, there are further optimizations we could make. For example, we could store the results of previous renders to identify which components in the tree are changing, then re-compute those nodes first on subsequent renders. For now, though, we're quite pleased with the performance we've achieved.
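The idea of remembering which components change can be sketched as a small piece of bookkeeping. This is purely illustrative and not something Compose ships: it assumes components are identified by stable string ids, and the `ChangeTracker` class and its methods are hypothetical. It counts how often each node has changed across renders and orders node ids so the most frequently changing ones come first.

```typescript
// Hypothetical bookkeeping for the "re-compute changing nodes first" idea:
// count how often each node changed across previous renders.
class ChangeTracker {
  private changeCounts = new Map<string, number>();

  // Record the ids of nodes whose output changed in the latest render.
  recordRender(changedIds: Iterable<string>): void {
    for (const id of changedIds) {
      this.changeCounts.set(id, (this.changeCounts.get(id) ?? 0) + 1);
    }
  }

  // Order node ids so frequently changing nodes are re-computed first.
  // Ties keep their input order because Array.prototype.sort is stable.
  prioritize(nodeIds: string[]): string[] {
    const count = (id: string) => this.changeCounts.get(id) ?? 0;
    return [...nodeIds].sort((a, b) => count(b) - count(a));
  }
}
```

Whether this ordering pays off depends on how the renderer schedules node computation, so it is best treated as a starting point for measurement.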

Many developers use Compose to build complicated internal tools with huge tables, PDFs, charts, and other complex components. Thanks to these optimizations, Compose keeps latency under 100ms for more than 99.5% of apps, which is faster than most traditional client-side web applications.

